Public PostgreSQL?#

I want a tool that can easily ingest arbitrary data that I can use for personal data science.

At first I was thinking of running Postgres publicly - of course with TLS enabled and strict access control.

For this to be viable, authorization attempts need to be rate limited. There must be no way to brute force the password!

Maybe a Postgres connection pooler like PgBouncer or Pgpool-II could do the job, but I found nothing matching my exact use case. PgBouncer can limit connections in general. That’s something I could abuse, but it seems overkill for such a simple thing.

Alternatives I considered, but refused:

fail2ban, because I am not keen on keeping rules up to date

iptables, because I am not keen on keeping rules up to date

PostgREST takes the easy way out and uses Nginx’ leaky bucket to do rate limiting for their HTTP endpoints.

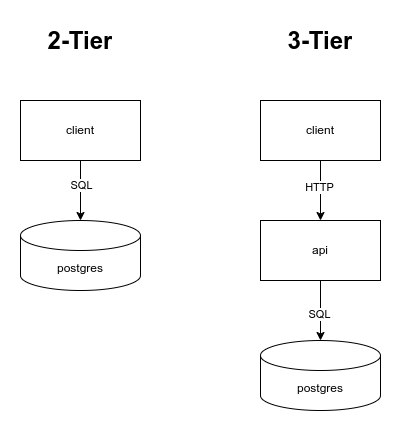

Maybe that’s the way to go. REST via HTTP has some big benefits - especially on an architectural level, as one can see here.

We get many features like

statelessness (for scale)

caching (for speed)

a huge ecosystem (authentication, rate limiting, access control, …)

ease of use (any HTTP client really)

The biggest drawbacks are

increased complexity (the HTTP layer has to developed and maintained)

We are forced to map HTTP to SQL, thus creating an abstraction that is not necessary for this case, though this can also be a good thing, depending on how you look at it.

Basically it comes down to this:

Note that the 3-Tier whould have at least four running processes, because for the “web” box’ rate limiting feature, you’d need a reverse proxy.